When biometrics enters the news cycle, it is usually in the context of a critical discussion on the risks versus the benefits – an ethical debate, as evidenced by recent events in Afghanistan, that can be a matter of life and death. Taking a step back to a more technical level, fundamental questions arise: what is the purpose of collecting biometrics, and is it possible to process it in a way that is fit for purpose?

In this post, ahead of the upcoming DigitHarium discussions on biometrics, ICRC strategic technology advisers Vincent Graf Narbel and Justinas Sukaitis investigate how modern techniques in information security can – or cannot – be applied to the unique nature of biometrics data in the humanitarian context.

Let’s begin by stating the obvious: in principle, any collected data should have a purpose. The purpose of collecting biometrics, as its Greek roots indicate (‘bio’ meaning ‘life’, and ‘metrics’ meaning ‘measure’), is to measure a parameter of life. In other words, biometric data relate to who we are and provide the measure of a particular trait of an individual.

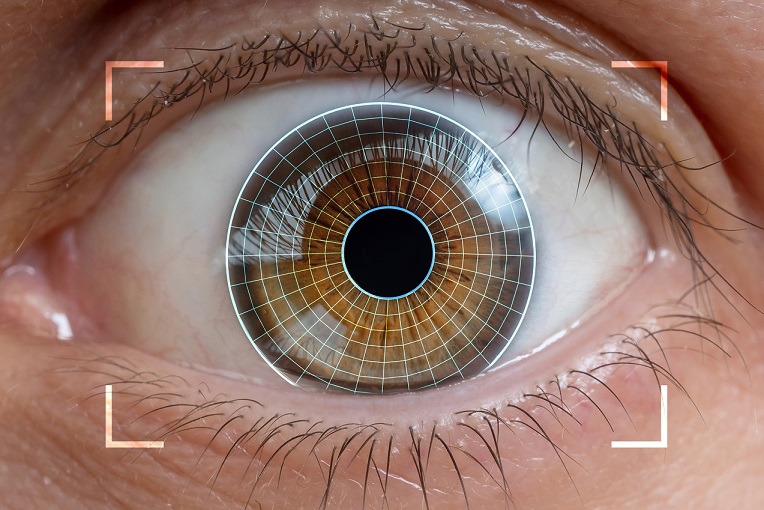

Some traits are more distinctive than others. ‘Soft biometrics’ such as height, age, gender, and eye color can be used to differentiate individuals but are not capable of identifying them since, for example, many people share the same height or eye color. ‘Hard biometrics’, traits such as iris patterns or vein patterns, are capable of identifying a specific individual. It is this latter category, the hard traits, that is collected today in humanitarian biometrics-based registration systems.

Identity as a concept is an extremely complex topic, one that deserves a separate discussion we will not unpack here. However, it is important to discuss the process of identifying people with biometrics, which involves two main modalities. The first is verification (a one-to-one comparison): if an individual says their name is Jane Doe, the biometric data previously registered for Jane Doe are checked against a fresh biometric sample to verify that claim. The second is identification (a one-to-many comparison): a fresh biometric sample is matched against all stored biometric data to determine whether the person in question is in the database and, if so, who they are.
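
To make the difference between the two modalities concrete, here is a minimal Python sketch. It assumes, purely for illustration, that each enrolled template is a short bit string compared by Hamming distance against a hypothetical threshold; `hamming_distance`, `verify`, `identify` and `THRESHOLD` are illustrative names, not any vendor’s API.

```python
from typing import Dict, List, Optional

# Illustrative assumption: a template is a fixed-length list of bits (0/1).
Template = List[int]

THRESHOLD = 0.25  # hypothetical maximum fraction of differing bits for a match


def hamming_distance(a: Template, b: Template) -> float:
    """Fraction of positions at which two templates differ (0.0 means identical)."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def verify(db: Dict[str, Template], claimed_id: str, sample: Template) -> bool:
    """Verification (1:1): compare the fresh sample with the claimed identity only."""
    enrolled = db.get(claimed_id)
    return enrolled is not None and hamming_distance(enrolled, sample) <= THRESHOLD


def identify(db: Dict[str, Template], sample: Template) -> Optional[str]:
    """Identification (1:N): search every stored template for the closest match."""
    best_id, best_distance = None, THRESHOLD
    for person_id, enrolled in db.items():
        distance = hamming_distance(enrolled, sample)
        if distance <= best_distance:
            best_id, best_distance = person_id, distance
    return best_id


# Hypothetical enrolment database and a fresh capture of Jane Doe (one bit differs).
db = {
    "jane_doe": [1, 0, 1, 1, 0, 0, 1, 0],
    "john_roe": [0, 1, 0, 0, 1, 1, 0, 1],
}
fresh = [1, 0, 1, 1, 0, 0, 1, 1]

print(verify(db, "jane_doe", fresh))  # True
print(verify(db, "john_roe", fresh))  # False
print(identify(db, fresh))            # jane_doe
```

The structural difference matters for data protection: `verify` touches a single record, which could in principle be held by the person themselves, whereas `identify` needs access to every stored template.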

Humanitarian action has use cases for both modalities. It is critical to determine up front which one is required when designing a biometrics-based authentication system, in order to properly address the data protection risks. Let’s also not forget that biometrics only add an additional layer of security to a process. For instance, passport biometrics can confirm that the bearer is the person the passport was issued to, but they cannot tell whether the passport itself is legitimate; the officer still needs to check the passport’s validity date or watermark.

Biometrics: over-purposed by nature

With the exception of some specific forensic uses, biometrics data tend to experience ‘function creep’ from the moment they are captured. One cannot restrict the collection of biometrics data to a verification or identification purpose only, and biometrics data can always reveal – now or in the future – more about the person than is needed for the original intended purposes.

For instance, iris scans or vein network imagery are used in hospitals to diagnose patients. If a humanitarian organization uses iris scans or vein network imagery for identification purposes, there is nothing preventing it a priori from using the same data to obtain information on the health of the people it is identifying, even if this goes well beyond the scope of the collection. Nor is this limited to health-related secondary use: facial images reveal a lot of information about a person (e.g. their ethnicity or age range).

Of course, biographic data can also reveal more information than intended by the original purpose; for instance, names can reveal information about ethnicity. This can also happen indirectly, when two or more sets of data are processed together to infer new information. However, biographic data is rarely sufficient to single you out from a group, while your iris is unique to you.

The takeaway here is that using biometrics data for verification or identification is very problematic from a data minimization and purpose limitation perspective. This is also one of the reasons it is so sensitive: when your biometrics data are in the wild, anyone who gets their hands on them will be stealing much more than your entitlement to a humanitarian distribution; they will gain the capacity to learn a lot about you and then to trace you. In a humanitarian context this is extremely problematic, as it violates the do-no-harm principle and potentially jeopardizes impartiality.

Cracking the nut

The problem to solve is to find a method where the desirable features of biometrics can be obtained without keeping the biometrics data available for secondary use. In other words, the data stored in the identification systems should not be in a form that is exploitable to deduce health conditions, ethnicity, or other problematic disclosures.

The ideal scenario would be to identify people with systems that do not expose the biometrics data, so that if data is lost or leaked it is not even recognizable as biometrics but instead looks like ‘junk data’. While this concept may sound convoluted, it has been used for decades in virtually every password-based authentication system, thanks to hashing functions. Hashing, a one-way function that transforms a word or sentence into a fixed-length, practically unique sequence of characters, allows passwords to be stored in a way that does not reveal what the password is but still allows it to be checked later.
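
As a sketch of how password hashing typically works, the snippet below uses Python’s standard `hashlib.pbkdf2_hmac` with a random salt. The iteration count is an illustrative value, not a recommendation. Only the salt and the digest would be stored, never the password itself.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

ITERATIONS = 200_000  # illustrative work factor; tune according to current guidance


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest); only these are stored, never the password."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def check_password(password: str, salt: bytes, stored_digest: bytes) -> bool:
    """Recompute the digest from the typed password and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored_digest)


salt, stored = hash_password("correct horse battery staple")
print(check_password("correct horse battery staple", salt, stored))   # True
print(check_password("correct horse battery stapler", salt, stored))  # False
```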

At the risk of oversimplification, we need to adopt the same approach for biometrics, a recommendation more easily said than done. Passwords must always be exactly the same when we type them; if even a single character is different, the password is rejected. This is the property that makes password hashing possible: every different password generates a totally different hash, and verification succeeds only when the two hashes are exactly the same. In other words, the clear-text password is never stored or compared directly; only the hashes are.
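
The all-or-nothing behavior of hashes can be observed directly: hashing two passwords that differ by a single character produces digests that differ in roughly half of their 256 bits, while hashing the same password twice gives an identical result. This sketch uses SHA-256 from Python’s standard library purely for demonstration.

```python
import hashlib


def sha256_as_int(text: str) -> int:
    """SHA-256 digest of the text, interpreted as a 256-bit integer."""
    return int.from_bytes(hashlib.sha256(text.encode("utf-8")).digest(), "big")


a = sha256_as_int("password1")
b = sha256_as_int("password2")  # differs from the first by a single character
changed_bits = bin(a ^ b).count("1")

print(f"{changed_bits} of 256 bits differ")  # typically close to 128
print(sha256_as_int("password1") == a)       # True: same input, same hash
```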

And therein lies the challenge: because biometric samples are never exactly the same when collected (due to lighting, position, angle, dust and other factors), exact-match hashing cannot be used. Instead, a match is decided via a threshold on a similarity score: for instance, if the two compared biometric samples are 95% the same, they are considered a match.
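
The following sketch simulates why exact hashing breaks down for biometrics and why a threshold works instead. It assumes a hypothetical 2,048-bit template, loosely modeled on an iris code, and models a fresh capture as random bit flips at a 2% rate; real capture noise is neither this simple nor this uniform. The 95% threshold mirrors the figure used in the text.

```python
import hashlib
import random
from typing import List

TEMPLATE_BITS = 2048    # hypothetical template length
MATCH_THRESHOLD = 0.95  # "95% the same", as in the text


def recapture(template: List[int], flip_rate: float, rng: random.Random) -> List[int]:
    """Simulate a fresh capture: each bit flips with probability flip_rate."""
    return [bit ^ int(rng.random() < flip_rate) for bit in template]


def similarity(a: List[int], b: List[int]) -> float:
    """Fraction of bits on which the two templates agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def digest(template: List[int]) -> str:
    """Exact-match hash of a template, as one would do for a password."""
    return hashlib.sha256(bytes(template)).hexdigest()


rng = random.Random(42)
enrolled = [rng.randint(0, 1) for _ in range(TEMPLATE_BITS)]
fresh = recapture(enrolled, flip_rate=0.02, rng=rng)          # same person, new capture
stranger = [rng.randint(0, 1) for _ in range(TEMPLATE_BITS)]  # a different person

print(digest(enrolled) == digest(fresh))                  # hash comparison fails
print(similarity(enrolled, fresh) >= MATCH_THRESHOLD)     # threshold comparison matches
print(similarity(enrolled, stranger) >= MATCH_THRESHOLD)  # a stranger does not match
```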

In its Biometrics Policy, the ICRC decided to restrict its use of biometrics to cases where the data is stored on a token, a device that remains in the hands of the user, such as a card. This comes, however, at the cost of some functionality, in particular for the identification modality. Whether identification is legitimate in humanitarian contexts is beyond the scope of this post; we only observe that this scenario is not supported by token-based biometrics solutions. This position is also driven by the lack of available solutions that would protect biometrics as set out above.

Research to advance this field exists but lacks resources[1], while efforts to standardize biometrics are also very much a work in progress. For instance, when you take a photo for your passport, there are rules and standards you have to follow: no sunglasses, no face cover, position of the eyes in the photo, etc. For biometrics more broadly, standardization is equally important, both to allow for system interoperability and prevent vendor lock-in (e.g. to replace an iris or fingerprint scanner with one from another provider) and to improve the quality of development and testing (e.g. to fight biases and enable accountability for performance).

Can we fix it?

Despite the challenges, new research in this domain exists, including on how to securely store biometrics in a centralized database or even on a public cloud. These technologies focus on new ways of processing biometric data so that it works much like password hashing: the processed data remain usable to properly identify individuals, but reveal no information about them, and the original biometric sample cannot be recreated from them (irreversibility).
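
One well-known construction from the research literature that aims to give biometrics hash-like storage is the fuzzy commitment scheme (Juels and Wattenberg, 1999); we do not claim it is the specific research referred to above. The sketch below is a toy version under strong assumptions: the template is a list of uniformly random bits, and a simple repetition code stands in for a real error-correcting code. Only a hash of a random key and a ‘helper’ bit string are stored; a fresh sample close enough to the enrolled one lets the key be recovered and its hash checked.

```python
import hashlib
import random
import secrets
from typing import List, Tuple

REPEAT = 15                        # toy error-correcting code: each key bit repeated 15 times
KEY_BITS = 128                     # hypothetical secret key length
TEMPLATE_BITS = KEY_BITS * REPEAT  # the template must match the codeword length


def encode(key: List[int]) -> List[int]:
    """Repetition code: expand each key bit into REPEAT copies."""
    return [bit for bit in key for _ in range(REPEAT)]


def decode(codeword: List[int]) -> List[int]:
    """Majority vote per block; corrects up to REPEAT // 2 flipped bits in each block."""
    return [int(sum(codeword[i:i + REPEAT]) > REPEAT // 2)
            for i in range(0, len(codeword), REPEAT)]


def key_hash(key: List[int]) -> str:
    return hashlib.sha256(bytes(key)).hexdigest()


def enroll(template: List[int]) -> Tuple[str, List[int]]:
    """Store only hash(key) and helper = codeword XOR template, not the template itself."""
    if len(template) != TEMPLATE_BITS:
        raise ValueError("unexpected template length")
    key = [secrets.randbelow(2) for _ in range(KEY_BITS)]
    helper = [c ^ t for c, t in zip(encode(key), template)]
    return key_hash(key), helper


def verify(fresh: List[int], stored_hash: str, helper: List[int]) -> bool:
    """helper XOR fresh = codeword XOR (template XOR fresh); decoding removes small differences."""
    if len(fresh) != TEMPLATE_BITS:
        raise ValueError("unexpected template length")
    recovered_key = decode([h ^ f for h, f in zip(helper, fresh)])
    return key_hash(recovered_key) == stored_hash


rng = random.Random(7)
enrolled = [rng.randint(0, 1) for _ in range(TEMPLATE_BITS)]
fresh = [bit ^ int(rng.random() < 0.03) for bit in enrolled]  # same person, about 3% of bits differ
stranger = [rng.randint(0, 1) for _ in range(TEMPLATE_BITS)]

stored_hash, helper = enroll(enrolled)
print(verify(fresh, stored_hash, helper))     # the fresh sample recovers the key
print(verify(stranger, stored_hash, helper))  # a different person does not
```

A caveat: real biometric features are neither uniformly random nor independent, and with such features the helper data can leak information about the template, so this illustrates the principle rather than a secure design.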

Another key related property is revocability. Passwords can easily be changed when compromised, but people cannot change their biometric traits: you cannot replace an iris or a fingerprint. One idea is to transform biometrics data with the help of other data (for example a secret key) to generate a template that can be revoked and reissued.
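
A common family of revocable (‘cancelable’) templates applies a key-dependent transformation to the biometric features. The sketch below follows the spirit of BioHashing: a secret key seeds a random projection, and the projected values are binarized. The feature vector, key values and sizes are hypothetical, and Python’s `random` module is used for readability, not because it is suitable for security purposes. Revoking a compromised template amounts to issuing a new key: the same finger then produces a template that looks unrelated to the old one.

```python
import random
from typing import List


def revocable_template(features: List[float], key: int, out_bits: int = 128) -> List[int]:
    """BioHashing-style sketch: key-seeded random projection, then binarization by sign."""
    rng = random.Random(key)  # the (hypothetical) user-specific key determines the projection
    template = []
    for _ in range(out_bits):
        row = [rng.gauss(0.0, 1.0) for _ in features]
        projection = sum(w * f for w, f in zip(row, features))
        template.append(1 if projection >= 0 else 0)
    return template


def similarity(a: List[int], b: List[int]) -> float:
    """Fraction of bits on which the two templates agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


rng = random.Random(1)
features = [rng.gauss(0.0, 1.0) for _ in range(128)]      # hypothetical fingerprint features
recaptured = [f + rng.gauss(0.0, 0.1) for f in features]  # same finger, slightly noisy

old = revocable_template(features, key=1111)
old_again = revocable_template(recaptured, key=1111)  # still matches under the old key
reissued = revocable_template(features, key=2222)     # after revocation: new key, new template

print(similarity(old, old_again))  # high: the same person is still recognized
print(similarity(old, reissued))   # around 0.5: old and new templates look unrelated
```

This only helps as long as the key itself stays secret; if both the key and the template are compromised, the protection is considerably weaker.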

A different path of research focuses on reducing the uniqueness of the biometric data to the point where they are no longer sensitive and no longer reveal private information. These methods remove parts of the biometric sample (e.g. cutting an image into blocks and discarding most of them) or obfuscate the biometric data by distorting it or adding noise to it. These transformations ensure that the stored and processed data cannot be fully linked to the original. It is an open question, however, whether hiding only part of the biometric while leaving the rest somewhat in the open is sufficient in terms of privacy.
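
The block-discarding and noise-adding ideas can be sketched as follows, for a grayscale image represented as a list of pixel rows. The block size, fraction of blocks kept, noise level and seed are all hypothetical parameters. The sketch only shows the transformation applied before storage; it says nothing about how matching works on the reduced data, or whether the result is private enough, which is precisely the open question raised above.

```python
import random
from typing import Dict, List, Tuple

Image = List[List[float]]
BlockMap = Dict[Tuple[int, int], Image]


def split_into_blocks(img: Image, size: int) -> BlockMap:
    """Cut the image into size x size blocks, keyed by (block_row, block_col)."""
    blocks: BlockMap = {}
    for r in range(0, len(img), size):
        for c in range(0, len(img[0]), size):
            blocks[(r // size, c // size)] = [row[c:c + size] for row in img[r:r + size]]
    return blocks


def reduce_and_obfuscate(img: Image, size: int, keep_fraction: float,
                         noise_std: float, seed: int) -> BlockMap:
    """Keep a random subset of blocks and add Gaussian noise to every kept pixel."""
    rng = random.Random(seed)
    blocks = split_into_blocks(img, size)
    n_keep = max(1, round(len(blocks) * keep_fraction))
    kept_positions = rng.sample(sorted(blocks), n_keep)  # all other blocks are discarded
    return {
        pos: [[px + rng.gauss(0.0, noise_std) for px in row] for row in blocks[pos]]
        for pos in kept_positions
    }


# Hypothetical 32 x 32 grayscale "image" with synthetic pixel values.
img = [[float((r * 7 + c * 3) % 256) for c in range(32)] for r in range(32)]
stored = reduce_and_obfuscate(img, size=8, keep_fraction=0.25, noise_std=5.0, seed=3)

print(len(stored), "of 16 blocks kept")
```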

* * * * *

We all know that technology in and of itself is neither good nor bad, and that it depends on how we choose to use it. With biometrics, these choices require a profound debate in the humanitarian sector and in broader society, one that involves ethical considerations and new legal frameworks. To fuel that debate and go beyond declarations of intent, it is important to have all the information available. This means, for instance, staying mindful that even with robust data protection by design processes, biometrics data remain over-purposed by nature, since they reveal a lot more than intended. There is therefore a certain urgency to invest more in technical research to protect people from function creep. With this in mind, we are calling for partnerships on the issue; in this case at least, public-private collaboration is necessary, as biometrics-based identification systems are being rolled out at increasing speed.

[1] For instance, leading work in this area is somewhat outdated: the ISO standard on biometric information protection (ISO/IEC 24745:2011 Information technology — Security techniques — Biometric information protection), published in 2011, is only now, in 2021, undergoing an update that includes modern cryptographic protection concepts, while most security frameworks for biometrics are based on a model devised in 2001 (the Ratha model).

See also

- Ben Hayes & Massimo Marelli, Facilitating innovation, ensuring protection: the ICRC Biometrics Policy, October 18, 2019

- Faine Greenwood, Drones and distrust in humanitarian aid, July 22, 2021

- Gus Hosein, Protecting the digital beneficiary, June 12, 2018
