International discussions have tended to focus on the compatibility of autonomous weapon systems (broadly defined by the ICRC as weapons with autonomy in their critical functions of selecting and attacking targets, and including some existing weapons) with the rules governing the conduct of hostilities under international humanitarian law and the use of force under international human rights law. These rules require that a minimum level of human control be retained over weapon systems and the use of force, variously characterized as ‘meaningful’ or ‘effective’ ‘human control’ or ‘appropriate human involvement’. As such, these rules demand limits on autonomy in weapon systems.

Ethical debates

Ethical questions have often appeared something of an afterthought in these discussions. But, for many, they are at the heart of what increasing autonomy could mean for the human conduct of warfare, and the use of force more broadly. It is precisely fear about the loss of human control over decisions to kill, injure and destroy—clearly felt outside the confines of UN disarmament meetings—that goes beyond issues of compliance with our laws to encompass fundamental questions of acceptability to our values.

Can we allow human decision-making on the use of force to be effectively substituted with computer-controlled processes, and life-and-death decisions to be ceded to machines?

With this in mind, the ICRC held a round-table meeting with independent experts in August 2017 to explore the issues in more detail. (Related opinion pieces have also been published on ethics as a source of law and on distancing in armed conflict.)

Based on discussions at the meeting, and from the ICRC’s perspective, the ethical considerations with most relevance to current policy responses are those that transcend context—whether armed conflict or peacetime—and transcend technology—whether ‘dumb’ or ‘sophisticated’ (AI-based) autonomous weapon systems.

Human agency

Foremost among these is the importance of retaining human agency—and intent—in decisions to kill, injure and destroy. It is not enough to say ‘humans have developed, deployed and activated the weapon system’. There must also be a sufficiently close connection between the human intent of the person activating an autonomous weapon system and the consequences of the specific attack that results.

One way to reinforce this connection is to demand predictability and reliability, both in terms of the weapon system's functioning and its interaction with the environment in which it is used, while taking into account unpredictability in the environment itself. However, these demands are challenged by the very nature of autonomy. All autonomous weapon systems, which by definition can self-initiate attacks, create varying degrees of uncertainty as to exactly when, where and/or why a resulting attack will take place. Increasingly complex algorithms, especially those incorporating machine learning, add a deeper layer of unpredictability, heightening these underlying uncertainties.

So, what is ‘meaningful’ or ‘effective’ human control from an ethical perspective? One way to characterize it would be the type and degree of control that preserves human agency and intent in decisions to use force. This doesn’t necessarily exclude autonomy in weapon systems, but it does require limits in order to maintain the connection between the human intent of the user and the eventual consequences.

Limits on autonomy

To a certain extent, ethical considerations may demand some similar constraints on autonomy to those needed for compliance with international law, in particular with respect to: human supervision and ability to intervene or deactivate; technical requirements for predictability and reliability; and operational constraints on the tasks, the types of targets, the operating environment, the duration of operation and the scope of movement over an area.

These operational constraints—effectively the context of use—are critical from an ethical point of view. Core concerns about loss of human agency and intent and related concerns about loss of moral responsibility and loss of human dignity (see the report for a fuller explanation) are most acute with the notion of autonomous weapon systems used to target humans directly.

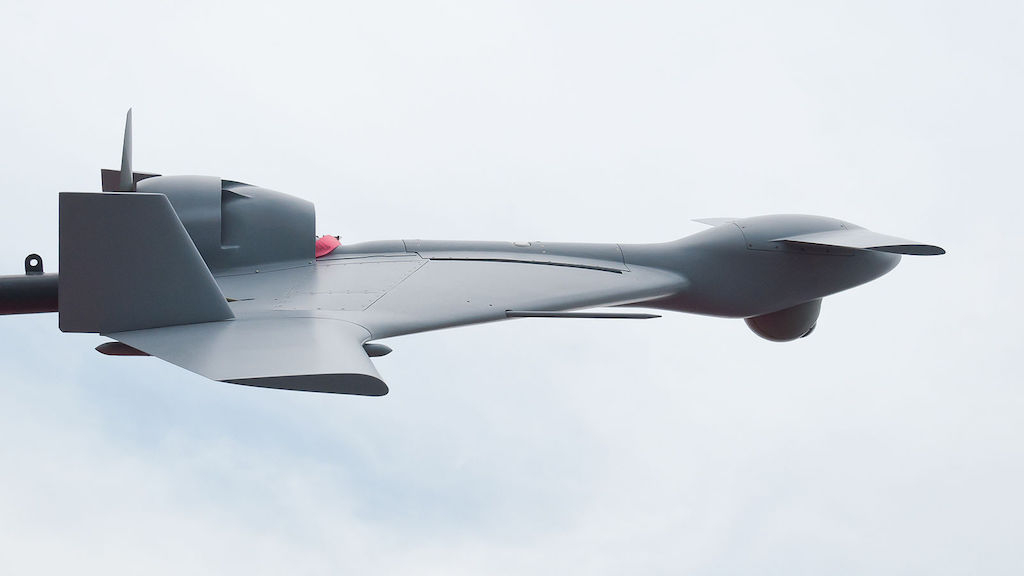

It is here that ethical considerations could have the most far-reaching implications, perhaps precluding the development and use of anti-personnel autonomous weapon systems, and even limiting the applications of anti-materiel systems, depending on the associated risks for human life. Indeed, one could argue, this is where the ethical boundary currently lies, and a key reason (together with legal compliance) why the use of autonomous weapon systems to date has generally been constrained to specific tasks—anti-materiel targeting of incoming projectiles, vehicles, aircraft, ships or other objects—in highly constrained scenarios and operating environments.

Human-centred approach

What is clear is that—from both ethical and legal perspectives—we must place the role of the human at the centre of international policy discussions. This is in contrast to most other restrictions or prohibitions on weapons, where the focus has been on specific categories of weapons and their observed or foreseeable effects. The major reason for this—aside from the opaque trajectories of military applications of robotics and AI in weapon systems—is that autonomy in targeting is a feature that could, in theory, be applied to any weapon system.

Ultimately, it is human obligations and responsibilities in the use of force—which cannot, by definition, be transferred to machines, algorithms or weapon systems—that will determine where internationally agreed limits on autonomy in weapon systems must be placed. Ethical considerations will have an important role to play in these policy responses, which—with rapid military technology development—are becoming increasingly urgent.

***

For the full report of the 2017 ICRC meeting

See also

- Autonomous weapon systems: what the law says – and does not say – about the human role in the use of force, Laura Bruun, 11 November 2021

- Introduction to mini-series: Autonomous weapon systems and ethics, 4 October 2017

- Distance, weapons technology and humanity in armed conflict, Alex Leveringhaus, 6 October 2017

- Ethics as a source of law: The Martens Clause and autonomous weapons, Rob Sparrow, 14 November 2017

- Autonomous weapon systems under international humanitarian law, Neil Davison, 31 January 2018

- Autonomous weapon systems: A threat to human dignity?, Ariadna Pop, 10 April 2018

- Human judgment and lethal decision-making in war, Paul Scharre, 11 April 2018

- Artificial intelligence and machine learning in armed conflict: A human-centred approach, ICRC, 6 June 2019
